Leading Senior Constable Dr Janis Dalins is looking for 100,000 happy images of children – a toddler in a sandpit, a nine-year-old winning an award at school, a sullen teenager unwrapping a present at Christmas and pretending not to care.

The search for these safe, happy pictures is the goal of a new campaign to crowdsource a database of ethically obtained images that Dalins hopes will help build better investigative tools to use in the fight against what some have called a “tsunami” of child sexual abuse material online.

Dalins is the co-director of the AiLecs lab, a collaboration between Monash University and the Australian federal police, which builds artificial intelligence technologies for use by law enforcement.

In its new My Pictures Matter campaign, people over 18 are being asked to share safe photos of themselves at different stages of their childhood. Once uploaded with information identifying the age and the person in the image, these will go into a database of other safe images. Eventually a machine learning algorithm will be made to read this album again and again until it learns what a child looks like. Then it can go looking for them.
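Reading the album “again and again” corresponds to training over repeated passes, or epochs, through labelled data. The AiLecs model itself is not described here, so the sketch below uses a classic perceptron on made-up two-number feature vectors purely to illustrate the idea. `train_perceptron`, `predict` and the toy data are all hypothetical, and a real image classifier would be a far larger model working on pixels.

```python
from typing import Sequence

# Hypothetical labelled example: (feature vector, label 0 or 1).
Example = tuple[Sequence[float], int]


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> int:
    """Return 1 if the weighted sum plus bias is positive, else 0."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


def train_perceptron(data: Sequence[Example], epochs: int = 20, lr: float = 0.1):
    """Perceptron training: make repeated passes (epochs) over the labelled
    data, nudging the weights after each mistake. Stop early once a full pass
    makes no mistakes. Returns (weights, bias, passes used)."""
    w = [0.0] * len(data[0][0])
    b = 0.0
    for epoch in range(epochs):
        mistakes = 0
        for x, y in data:
            pred = predict(w, b, x)
            if pred != y:
                step = lr * (y - pred)  # +lr for a missed positive, -lr for a false positive
                w = [wi + step * xi for wi, xi in zip(w, x)]
                b += step
                mistakes += 1
        if mistakes == 0:
            return w, b, epoch + 1
    return w, b, epochs


if __name__ == "__main__":
    # Made-up, linearly separable toy data standing in for labelled images.
    toy = [([0.0, 1.0], 1), ([1.0, 0.0], 0), ([0.2, 0.9], 1), ([0.9, 0.1], 0)]
    w, b, passes = train_perceptron(toy)
    print(w, b, passes)
    print([predict(w, b, x) for x, _ in toy])
```

The loop stops once a full pass over the album produces no mistakes. That is one simple reading of “until it learns”.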

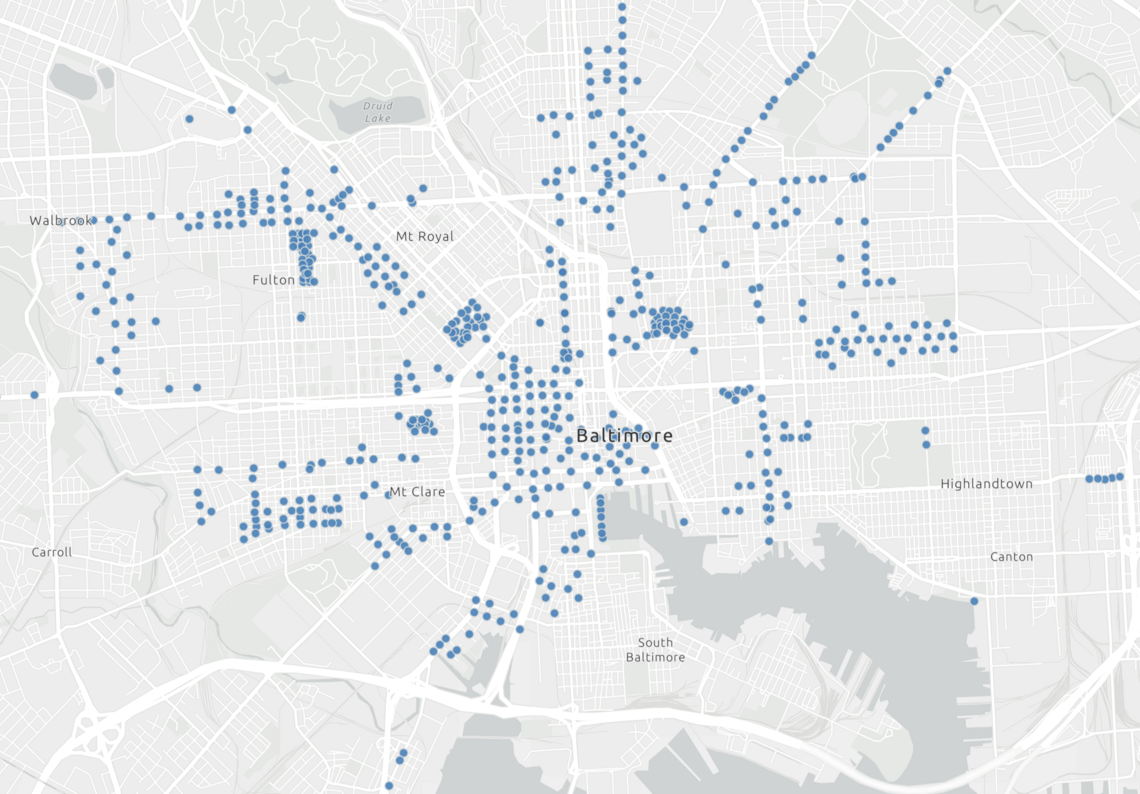

The algorithm will be used when a computer is seized from a person suspected of possessing child sexual abuse material, to quickly point investigators to where they are most likely to find images of children – an otherwise slow and labour-intensive process that Dalins encountered while working in digital forensics.

“It was totally unpredictable,” he says. “A person gets caught and you think you’ll find a couple hundred pictures, but it turns out this guy is a massive hoarder and that’s when we’d spend days, weeks, months sorting through this stuff.”

“That’s where the triaging comes in; [the AI] says if you want to look for this stuff, look here first because the stuff that’s likely bad is what you should be seeing first.” It will then be up to an investigator to review each image flagged by the algorithm.
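The triage Dalins describes amounts to ranking seized files by a model’s score so that the likeliest matches are reviewed first. What follows is a minimal sketch. It assumes a hypothetical `score` function standing in for a trained classifier, because the source does not describe the AiLecs tool’s interface. Nothing is discarded, and every file still reaches a human reviewer.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Item:
    path: str
    score: float  # hypothetical model output; higher means "review sooner"


def triage(paths: Iterable[str], score: Callable[[str], float]) -> list[Item]:
    """Order files for human review, highest model score first.

    `score` is a placeholder for a trained classifier. The ranking only
    decides what an investigator looks at first; no file is dropped.
    """
    items = [Item(p, score(p)) for p in paths]
    # sorted() is stable, so files with equal scores keep their original order
    return sorted(items, key=lambda it: it.score, reverse=True)


if __name__ == "__main__":
    # Toy scores standing in for model output on a seized drive.
    fake_scores = {"a.jpg": 0.12, "b.jpg": 0.91, "c.jpg": 0.47}
    for item in triage(fake_scores, fake_scores.__getitem__):
        print(item.path, item.score)
```

Sorting, rather than filtering, mirrors the point in the quote: the model changes only the order of review, not whether an investigator looks.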

Monash University will retain ownership of the photograph database and will impose strict restrictions on access.

The AiLecs project is small and targeted, but it is among a growing number of machine learning algorithms that law enforcement, NGOs, businesses and regulatory authorities are deploying to combat the spread of child sexual abuse material online.

These include tools like SAFER, an algorithm developed by the not-for-profit group Thorn that runs on a company’s servers and identifies images at the point of upload, and web crawlers like the one operated by Project Arachnid, which trawls the internet looking for new troves of known child sexual abuse material.

Whatever their function, Dalins says the proliferation of these algorithms is part of a wider technological “arms race” between child sex offenders and authorities.

“It’s a classic scenario – the same thing happens in cybersecurity: you build a better encryption standard, a better firewall, then someone, somewhere tries to find their way around it,” he says.

“[Online child abusers] were some of the most security-conscious people online. They were far more advanced than the terrorists, back in my day.”

‘A veritable tsunami’

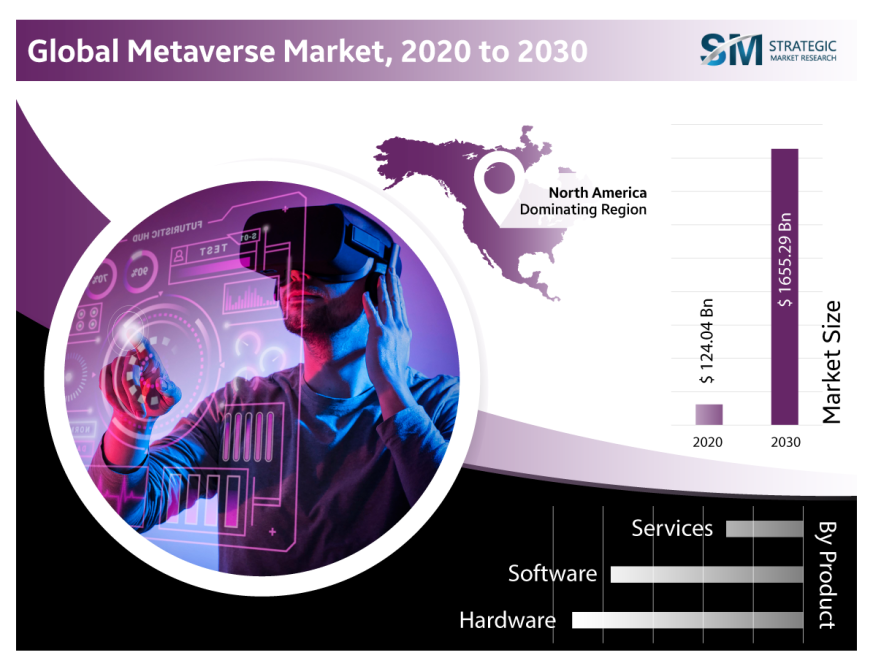

It’s an uncomfortable reality that there is more child sexual abuse material being shared online today than at any time since the internet was launched in 1983.

Authorities in the UK have seen a 15-fold increase in reports of online child sexual abuse material in the past decade. In Australia, the eSafety Commission described a 129% spike in reports during the early stages of the pandemic as a “veritable tsunami of this shocking material washing across the internet”.

The acting eSafety commissioner, Toby Dagg, told Guardian Australia that the issue was a “global problem”, with similar spikes recorded during the pandemic in Europe and the US.

“It’s massive,” Dagg says. “My personal view is that it’s a slow-rolling catastrophe that doesn’t show any sign of slowing soon.”

Though there is a common perception that offenders are confined to the back alleys of the internet – the so-called dark web, which is heavily watched by law enforcement agencies – Dagg says there has been considerable bleed into the commercial services people use every day.

Dagg says the full suite of services “up and down the technology stack” – social media, image sharing, forums, cloud sharing, encryption, hosting services – is being exploited by offenders, particularly where “safety hasn’t been embraced as a core tenet of business”.

The flood of reports about child sexual abuse material has come as these services have begun to look for it on their systems. Most material detected today is already known to authorities, because offenders collect and trade it in “sets”.
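Material that is “already known to authorities” is typically detected by comparing a fingerprint of each file against a list of fingerprints of previously identified material. This minimal sketch uses an exact SHA-256 digest as a stand-in. Production tools such as Microsoft’s PhotoDNA use perceptual hashes that survive resizing and re-encoding, and this sketch does not. The function names and the hash list are hypothetical.

```python
import hashlib
from typing import Mapping


def fingerprint(data: bytes) -> str:
    # Exact-match fingerprint: changing even one byte changes the digest,
    # unlike the perceptual hashes used by real detection tools.
    return hashlib.sha256(data).hexdigest()


def match_known(files: Mapping[str, bytes], known: set[str]) -> list[str]:
    """Return the names of files whose fingerprint is in the known-hash list.

    `known` is a hypothetical set of hex digests, standing in for a shared
    list of hashes of previously identified material.
    """
    return [name for name, data in files.items() if fingerprint(data) in known]


if __name__ == "__main__":
    known = {fingerprint(b"previously identified file")}
    uploads = {
        "holiday.jpg": b"new, unrelated file",
        "copy.jpg": b"previously identified file",
    }
    print(match_known(uploads, known))  # ['copy.jpg']
```

A lookup like this can only flag copies of material already on the list. It cannot flag new, previously unseen images, which is a different job from the classifier-based triage AiLecs is building.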

As many of these internet companies are based in the US, their reports are made to the National Center for Missing and Exploited Children (NCMEC), a non-profit organisation that coordinates reports on the matter, and the results from 2021 are telling. Facebook reported 22m instances of child abuse imagery on its servers in 2021. Apple, meanwhile, disclosed just 160.

These reports, however, don’t immediately translate into takedowns – each has to be investigated first. Even where entities like Facebook make a good-faith effort to report child sexual abuse material on their systems, the sheer volume is overwhelming for authorities.

“It’s happening, it’s happening at scale and as a consequence, you have to conclude that something has failed,” Dagg says. “We’re evangelists for the idea of safety by design, that safety should be built into a new service when bringing it to market.”

A fundamental design flaw

How this situation developed owes much to how the internet was built.

Historically, the spread of child sexual abuse material in Australia was limited by a combination of factors, including restrictive laws that controlled the importation of adult content.

Offenders often exploited existing adult entertainment supply chains to import this material and needed to form trusted networks with other like-minded individuals to obtain it.

This meant that when one was caught, all were caught.

The advent of the internet changed everything by creating a frictionless medium of communication where images, video and text could be shared near instantaneously with anyone, anywhere in the world.

University of New South Wales criminologist Michael Salter says the development of social media only took this a step further.

“It’s a bit like setting up a kindergarten in a nightclub. Bad things are going to happen,” he says.

Salter says a “naive futurism” among the early architects of the internet assumed the best of every user and failed to consider how bad-faith actors might exploit the systems they were building.

Decades later, offenders have become very effective at finding ways to share libraries of content and form dedicated communities.

Salter says this legacy lives on. Many services don’t look for child sexual abuse material on their systems, and those that do often scan their servers periodically rather than taking preventive steps such as scanning files at the point of upload.
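The distinction Salter draws is about when the check runs. This minimal sketch contrasts the two approaches using a hypothetical in-memory `Store` and a pluggable `check` function. In the example, the check is an exact SHA-256 lookup against a made-up block list. None of these names comes from any real service’s API.

```python
import hashlib
from typing import Callable

Check = Callable[[bytes], bool]  # hypothetical: returns True if a file should be flagged


class Store:
    """Hypothetical file store used to contrast two scanning strategies."""

    def __init__(self, check: Check) -> None:
        self.check = check
        self.files: dict[str, bytes] = {}

    def upload_with_scan(self, name: str, data: bytes) -> bool:
        # Preventive: the file is checked before it is stored or shared.
        if self.check(data):
            return False  # rejected; a real service might also report it
        self.files[name] = data
        return True

    def upload_without_scan(self, name: str, data: bytes) -> None:
        # Reactive: the file is stored and available immediately.
        self.files[name] = data

    def periodic_sweep(self) -> list[str]:
        # Runs later on a schedule; anything flagged here was already
        # hosted, and possibly shared, before the sweep ran.
        return [n for n, d in self.files.items() if self.check(d)]


def is_blocked(data: bytes) -> bool:
    # Made-up block list of SHA-256 digests for the demo.
    return hashlib.sha256(data).hexdigest() in {hashlib.sha256(b"known bad").hexdigest()}


if __name__ == "__main__":
    preventive = Store(is_blocked)
    print(preventive.upload_with_scan("x.jpg", b"known bad"))  # False
    print(preventive.files)  # {}

    reactive = Store(is_blocked)
    reactive.upload_without_scan("x.jpg", b"known bad")
    print(reactive.periodic_sweep())  # ['x.jpg']
```

Both stores catch the file eventually. The difference is the window between upload and sweep, during which the reactive store is hosting it.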

Meanwhile, as authorities catch up with this reality, technology is also opening up murky new frontiers.

Lara Christensen, a senior lecturer in criminology at the University of the Sunshine Coast, says “virtual child sexual abuse material” – video, images or text of any person who is or appears to be a child – poses new challenges.

“The key words there are ‘appears to be’,” Christensen says. “Australian legislation extends beyond protecting actual children and it acknowledges it could be a gateway to other material.”

Though this kind of material has existed for some years, Christensen’s concern is that more sophisticated technologies are opening up a whole new spectrum of offending: realistic computer-generated images of children, real images of children made to look fictional, deepfakes, morphed photos and text-based stories.

She says each creates new opportunities to directly harm children and/or attempt to groom them. “It’s all about accessibility, anonymity and affordability,” Christensen says. “When you put those three things in the mix, something can become a huge problem.”

A human in the loop

Over the last decade, the complex mathematics behind the algorithms combating this wave of criminal material has evolved significantly, but it is still not without problems.

One of the biggest concerns is that it is often impossible to know where the private sector has obtained the images it uses to train its AI. These may include images of child sexual abuse, or photos scraped from public social media accounts without the consent of those who uploaded them. Algorithms developed by law enforcement have traditionally relied on images of abuse seized from offenders.

This risks re-traumatising survivors whose images are being used without their consent. It also risks baking in the biases of the algorithms’ creators through a problem known as “overfitting”, in which an algorithm trained on bad or limited data learns the quirks of that data rather than the general pattern and returns bad results.

In other words: teach an algorithm to look for apples and it may find you an Apple iPhone.
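The apple example can be reproduced with a deliberately naive toy “model” that treats any word seen only in its handful of positive examples as evidence. This is a hypothetical illustration of what goes wrong when a model learns from limited data. It is not how image classifiers actually work, and `train` and `predict` are made-up names.

```python
from collections.abc import Iterable


def tokens(text: str) -> set[str]:
    """Lower-cased words in a caption."""
    return set(text.lower().split())


def train(positives: Iterable[str], negatives: Iterable[str]) -> set[str]:
    """Learn every word seen in positive examples but never in negative ones.

    With only a few examples, incidental words (here 'tree' and 'red') end
    up counting as evidence, alongside the word 'apple' itself.
    """
    pos = set().union(*(tokens(t) for t in positives))
    neg = set().union(*(tokens(t) for t in negatives))
    return pos - neg


def predict(model: set[str], text: str) -> bool:
    """Flag a caption if it shares any learned word with the model."""
    return bool(model & tokens(text))


if __name__ == "__main__":
    model = train(
        positives=["red apple on a tree", "green apple in a bowl"],
        negatives=["banana on a table", "orange in a bowl"],
    )
    print(sorted(model))  # ['apple', 'green', 'red', 'tree']
    print(predict(model, "new Apple iPhone"))  # True: matched the word, not the fruit
    print(predict(model, "a pear on a tree"))  # True: 'tree' was learned by accident
    print(predict(model, "banana bread"))  # False
```

With so few examples, the model treats “tree” and “red” as apple signals and cannot tell the fruit from the brand. The same failure, at much larger scale, is what biased or limited training images can produce.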

“Computers will learn exactly what you teach them,” Dalins says.

This is what the AiLecs lab is attempting to prove with its My Pictures Matter campaign: that it is possible to build these vital tools with the full consent and cooperation of those whose childhood images are being used.

But for all the advances in technology, Dalins says child sexual abuse investigations will always require human involvement.

“We’re not talking about identifying stuff so that the algorithm says x and that’s what goes to court,” he says. “We’re not seeing a time in the next five, 10 years where we would completely automate a process like this.

“You need a human in the loop.”