
Software has been the star of high tech over the past few decades, and it’s easy to understand why. With PCs and mobile phones, the game-changing innovations that defined this era, the architecture and software layers of the technology stack enabled several important advances. In this environment, semiconductor companies were in a difficult position. Although their innovations in chip design and fabrication enabled next-generation devices, they received only a small share of the value coming from the technology stack: about 20 to 30 percent with PCs and 10 to 20 percent with mobile.

But the story for semiconductor companies could be different with the growth of artificial intelligence (AI), typically defined as the ability of a machine to perform cognitive functions associated with human minds, such as perceiving, reasoning, and learning. Many AI applications have already gained a wide following, including virtual assistants that manage our homes and facial-recognition programs that track criminals. These diverse solutions, as well as other emerging AI applications, share one common feature: a reliance on hardware as a core enabler of innovation, especially for logic and memory functions.

What will this development mean for semiconductor sales and revenues? And which chips will be most important to future innovations? To answer these questions, we reviewed current AI solutions and the technology that enables them. We also examined opportunities for semiconductor companies across the entire technology stack. Our analysis revealed three important findings about value creation:

- AI could allow semiconductor companies to capture 40 to 50 percent of total value from the technology stack, representing the best opportunity they’ve had in decades.

- Storage will experience the highest growth, but semiconductor companies will capture the most value in compute, memory, and networking.

- To avoid mistakes that limited value capture in the past, semiconductor companies must adopt a new value-creation strategy that focuses on enabling customized, end-to-end solutions for specific industries, or “microverticals.”

By keeping these findings in mind, semiconductor leaders can create a new road map for winning in AI. This article begins by reviewing the opportunities they will find across the technology stack, focusing on the impact of AI on hardware demand at data centers and the edge (computing that happens on devices, such as self-driving cars). It then examines specific opportunities within compute, memory, storage, and networking. The article also discusses new strategies that could help semiconductor companies gain an advantage in the AI market, as well as issues they should consider as they plan their next steps.

The AI technology stack will open many opportunities for semiconductor companies

AI has made significant advances since its emergence in the 1950s, but some of the most important developments have occurred recently as developers created sophisticated machine-learning (ML) algorithms that can process large data sets, “learn” from experience, and improve over time. The greatest leaps came in the 2010s because of advances in deep learning (DL), a type of ML that can process a wider range of data, requires less data preprocessing by human operators, and often produces more accurate results.

To understand why AI is opening opportunities for semiconductor companies, consider the technology stack (Exhibit 1). It consists of nine discrete layers that support the two activities underlying AI applications: training and inference (see sidebar “Training and inference”). When developers try to improve training and inference, they often encounter roadblocks related to the hardware layer, which includes storage, memory, logic, and networking. By providing next-generation accelerator architectures, semiconductor companies could increase computational efficiency or facilitate the transfer of large data sets through memory and storage. For instance, specialized memory for AI has 4.5 times more bandwidth than traditional memory, making it much better suited to handling the vast stores of big data that AI applications require. This performance improvement is so great that many customers would be more willing to pay the higher price that specialized memory commands (about $25 per gigabyte, compared with $8 for standard memory).
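The price premium and the bandwidth gain can be compared directly. The sketch below is a back-of-the-envelope check, not part of the original analysis: it assumes equal capacities are being compared, so the per-gigabyte price ratio is also the cost ratio, and it uses a hypothetical helper name, `relative_cost_per_bandwidth`.

```python
# Figures quoted in the text: specialized AI memory offers about 4.5x the
# bandwidth of standard memory, at about $25/GB versus $8/GB.
SPECIALIZED_PRICE_PER_GB = 25.0
STANDARD_PRICE_PER_GB = 8.0
BANDWIDTH_MULTIPLE = 4.5


def relative_cost_per_bandwidth(price_new: float, price_old: float,
                                bandwidth_multiple: float) -> float:
    """Cost of one unit of bandwidth on the new memory, relative to the old.

    Hypothetical helper. Assumes equal capacities are compared, so the
    per-gigabyte price ratio is the total cost ratio.
    """
    price_ratio = price_new / price_old
    return price_ratio / bandwidth_multiple


ratio = relative_cost_per_bandwidth(SPECIALIZED_PRICE_PER_GB,
                                    STANDARD_PRICE_PER_GB,
                                    BANDWIDTH_MULTIPLE)
print(f"price ratio: {SPECIALIZED_PRICE_PER_GB / STANDARD_PRICE_PER_GB:.3f}")
print(f"relative cost per unit of bandwidth: {ratio:.3f}")
```

Under that assumption, the memory costs about 3.1 times more per gigabyte but delivers bandwidth at roughly 0.7 times the cost per unit, which is one way to read why customers accept the higher price.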

Exhibit 1

AI will drive a large portion of semiconductor revenues for data centers and the edge

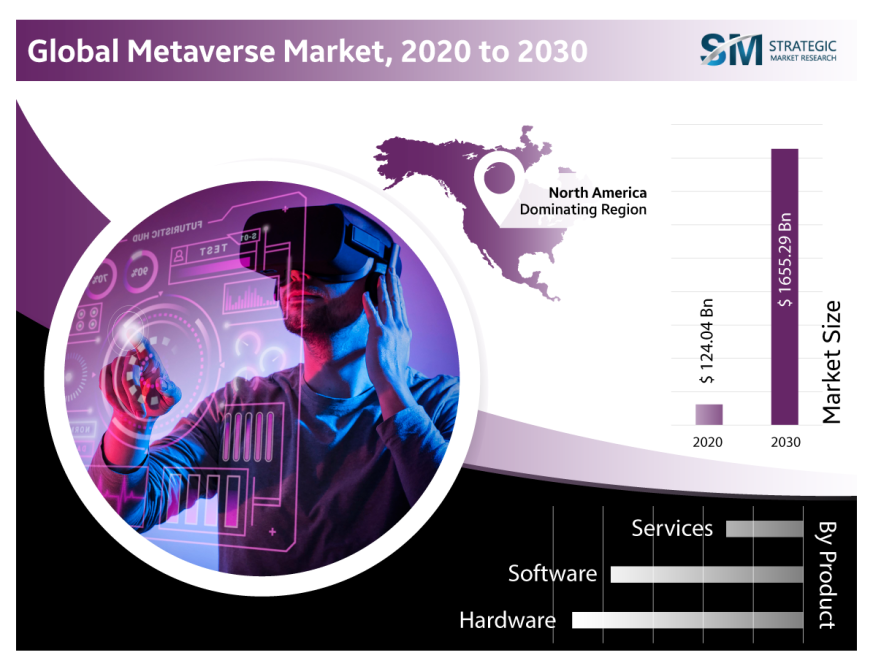

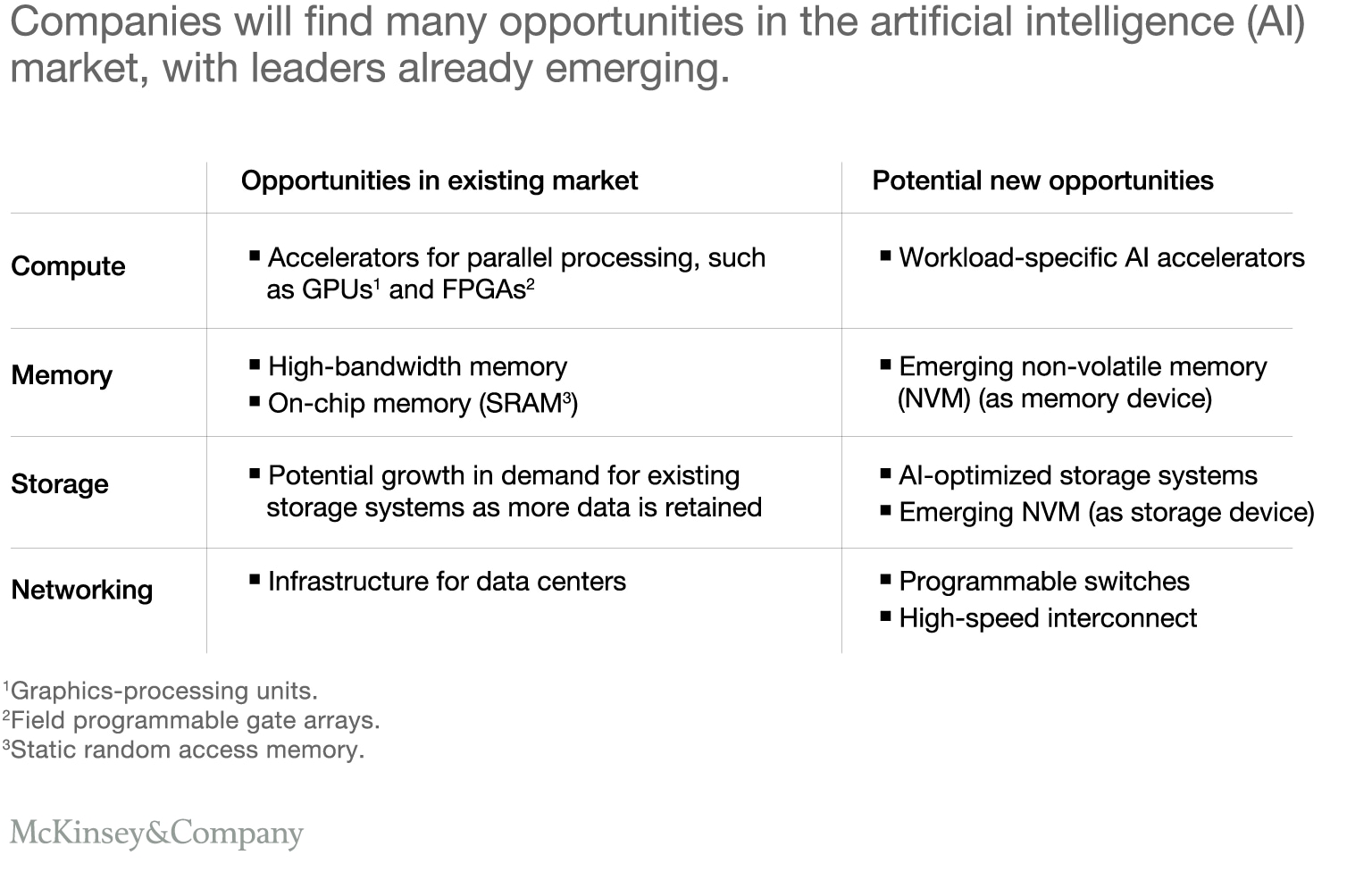

With hardware serving as a differentiator in AI, semiconductor companies will find greater demand for their existing chips, but they could also profit by developing novel technologies, such as workload-specific AI accelerators (Exhibit 2). We created a model to estimate how these AI opportunities would affect revenues and to determine whether AI-related chips would constitute a significant portion of future demand (see sidebar “How we estimated value” for more information on our methodology).

Exhibit 2

Our research revealed that AI-related semiconductors will see growth of about 18 percent annually over the next few years, five times greater than the rate for semiconductors used in non-AI applications (Exhibit 3). By 2025, AI-related semiconductors could account for almost 20 percent of all demand, which would translate into about $67 billion in revenue. Opportunities will emerge at both data centers and the edge. If this growth materializes as expected, semiconductor companies will be positioned to capture more value from the AI technology stack than they have obtained with previous innovations: about 40 to 50 percent of the total.
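To see why a fivefold difference in growth rates shifts the demand mix so quickly, the two rates can be compounded side by side. This is an illustrative sketch, not the article’s model: it assumes “five times greater” means the non-AI rate is 18/5 = 3.6 percent, and the eight-year horizon is a hypothetical choice.

```python
AI_RATE = 0.18             # AI-related semiconductor growth quoted in the text
NON_AI_RATE = AI_RATE / 5  # assumption: "five times greater" read as a rate ratio (3.6%)
YEARS = 8                  # hypothetical horizon, e.g. 2017 to 2025


def growth_multiple(rate: float, years: int) -> float:
    """How many times larger a quantity becomes after compounding `rate` for `years`."""
    return (1 + rate) ** years


ai_multiple = growth_multiple(AI_RATE, YEARS)
non_ai_multiple = growth_multiple(NON_AI_RATE, YEARS)

print(f"AI-related revenue multiple over {YEARS} years: {ai_multiple:.2f}x")
print(f"Non-AI revenue multiple over {YEARS} years: {non_ai_multiple:.2f}x")
# If both segments compound as above, the AI segment's size relative to the
# non-AI segment grows by this factor, which is why its share of demand rises.
print(f"Relative gain of AI vs. non-AI: {ai_multiple / non_ai_multiple:.2f}x")
```

Under these assumptions, AI-related revenue grows roughly 3.8x while non-AI revenue grows roughly 1.3x over the same period.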

Exhibit 3

AI will drive most growth in storage, but the best opportunities for value creation lie in other segments

We then took our analysis a step further by looking at specific opportunities for semiconductor players within compute, memory, storage, and networking. For each area, we examined how hardware demand is evolving at both data centers and the edge. We also quantified the growth expected in each category except networking, where AI-related opportunities for value capture will be relatively small for semiconductor companies.

Exhibit 4

Compute

Compute performance relies on central processing units (CPUs) and accelerators: graphics-processing units (GPUs), field programmable gate arrays (FPGAs), and application-specific integrated circuits (ASICs). Since each use case has different compute requirements, the optimal AI hardware architecture will vary. For instance, route-planning applications have different needs for processing speed, hardware interfaces, and other performance features than applications for autonomous driving or financial risk stratification (Exhibit 4).

Exhibit 5

Overall, demand for compute hardware will increase by about 10 to 15 percent through 2025 (Exhibit 5). After analyzing more than 150 DL use cases, looking at both inference and training requirements, we were able to identify the architectures most likely to gain ground in data centers and the edge (Exhibit 6).

Exhibit 6

Data-center usage. Most compute growth will stem from higher demand for AI applications at cloud-computing data centers. At these locations, GPUs are now used for almost all training applications. We expect that they will soon begin to lose market share to ASICs, until the compute market is about evenly divided between these solutions by 2025. As ASICs enter the market, GPUs will likely become more customized to meet the demands of DL. In addition to ASICs and GPUs, FPGAs will have a small role in future AI training, mostly for specialized data-center applications that must reach the market quickly or require customization, such as those for prototyping new DL applications.

For inference, CPUs now account for about 75 percent of the market. They’ll lose ground to ASICs as DL applications gain traction. Again, we expect to see an almost equal divide in the compute market, with CPUs accounting for 50 percent of demand in 2025 and ASICs for 40 percent.

Edge applications. Most edge training now occurs on laptops and other personal computers, but more devices may begin recording data and playing a role in on-site training. For instance, drills used during oil and gas exploration generate data related to a well’s geological characteristics that could be used to train models. For accelerators, the training market is now evenly divided between CPUs and ASICs. In the future, however, we expect that ASICs built into systems on chips will account for 70 percent of demand. FPGAs will represent about 20 percent of demand and will be used for applications that require significant customization.

When it comes to inference, most edge devices now rely on CPUs or ASICs, with a few applications, such as autonomous cars, requiring GPUs. By 2025, we expect that ASICs will account for about 70 percent of the edge inference market and GPUs for 20 percent.

Memory

AI applications have high memory-bandwidth requirements, since computing layers within deep neural networks must pass input data to thousands of cores as quickly as possible. Memory, typically dynamic random access memory (DRAM), is required to store input data and model weights and to perform other functions during both inference and training. Consider a model being trained to recognize the image of a cat. All intermediate results in the recognition process (for example, colors, contours, and textures) need to reside in memory as the model fine-tunes its algorithms. Given these requirements, AI will create a strong opportunity for the memory market, with value expected to increase from $6.4 billion in 2017 to $12.0 billion in 2025.
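The two market-value figures imply a compound annual growth rate (CAGR) that can be checked directly. A minimal sketch, using the standard CAGR formula and a hypothetical helper name, `cagr`:

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


# Memory-market values quoted in the text, in billions of dollars.
memory_cagr = cagr(6.4, 12.0, 2025 - 2017)
print(f"Implied memory CAGR, 2017 to 2025: {memory_cagr:.1%}")
```

The result is roughly 8 percent a year, consistent with the 5 to 10 percent annual growth cited for memory.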

That said, memory will see the lowest annual growth of the three accelerator categories, about 5 to 10 percent, because of efficiencies in algorithm design, such as reduced bit precision, as well as easing capacity constraints in the industry.
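Reduced bit precision lowers memory demand because each model weight takes fewer bytes. The text does not name a specific scheme; the sketch below uses symmetric linear quantization from 32-bit floats to signed 8-bit integers as one common example, and the function names and the 100-million-parameter model size are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric linear quantization of float weights to signed 8-bit values."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest step and clamp to the int8 range used here.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map 8-bit values back to approximate floats."""
    return [v * scale for v in q]


weights = [0.12, -0.5, 0.33, 0.0, 0.91, -0.77]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

# Memory per weight: 4 bytes in FP32 versus 1 byte in INT8 (plus one shared scale).
PARAMS = 100_000_000  # hypothetical model size
fp32_bytes = PARAMS * 4
int8_bytes = PARAMS * 1 + 4
print(f"max rounding error: {max_error:.5f}")
print(f"FP32: {fp32_bytes / 1e6:.0f} MB, INT8: {int8_bytes / 1e6:.0f} MB")
```

The weights shrink to about a quarter of their original size, at the cost of a rounding error of at most half a quantization step per weight.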

Most short-term memory growth will result from increased demand at data centers for the high-bandwidth DRAM required to run AI, ML, and DL algorithms. But over time, demand for AI memory at the edge will increase; for instance, connected cars may need more DRAM.

Current memory is typically optimized for CPUs, but developers are now exploring new architectures. Solutions that are attracting more interest include the following:

- High-bandwidth memory (HBM). This technology allows AI applications to process large data sets at maximum speed while minimizing power requirements. It allows DL compute processors to access a three-dimensional stack of memory through a fast connection called a through-silicon via (TSV). AI chip leaders such as Google and Nvidia have adopted HBM as the preferred memory solution, although it costs three times more per gigabyte than traditional DRAM, a move that signals their customers are willing to pay for expensive AI hardware in return for performance gains.

- On-chip memory. For a DL compute processor, storing and accessing data in DRAM or other off-chip memory can take 100 times longer than using memory on the same chip. When Google designed the tensor-processing unit (TPU), an ASIC specialized for AI, it included enough memory to store an entire model on the chip.

Start-ups such as Graphcore are also increasing on-chip memory capacity, taking it to a level about 1,000 times higher than what is found on a typical GPU, through a novel architecture that maximizes the speed of AI calculations. The cost of on-chip memory is still prohibitive for most applications, and chip designers must address this challenge.
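The 100-fold penalty explains why designers try to keep the whole model on chip: even a small share of off-chip accesses dominates the average. The sketch below applies the standard effective-access-time formula, a weighted average of on-chip and off-chip latency; the function name and the hit fractions are illustrative, and only the 100x ratio comes from the text.

```python
def effective_access_time(on_chip_fraction: float,
                          on_chip_time: float = 1.0,
                          off_chip_multiple: float = 100.0) -> float:
    """Average access time when a fraction of accesses are served on chip.

    Times are in arbitrary units relative to one on-chip access; the 100x
    off-chip penalty is the figure quoted in the text.
    """
    if not 0.0 <= on_chip_fraction <= 1.0:
        raise ValueError("on_chip_fraction must be between 0 and 1")
    off_chip_time = on_chip_time * off_chip_multiple
    return on_chip_fraction * on_chip_time + (1 - on_chip_fraction) * off_chip_time


for fraction in (0.0, 0.5, 0.9, 0.99, 1.0):
    print(f"{fraction:4.2f} on chip -> {effective_access_time(fraction):6.2f}x")
```

With 90 percent of accesses on chip, the average access is still about 11 times slower than a fully on-chip design, which is why fitting an entire model on the chip matters.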

Storage

AI applications generate vast volumes of data, about 80 exabytes per year, which is expected to increase to 845 exabytes by 2025. In addition, developers are now using more data in AI and DL training, which also increases storage requirements. These shifts could lead to annual growth of 25 to 30 percent for storage from 2017 to 2025, the highest rate of all segments we examined.

Manufacturers will increase their output of storage accelerators in response, with pricing dependent on supply staying in sync with demand.

Unlike traditional storage solutions, which tend to take a one-size-fits-all approach across different use cases, AI solutions must adapt to changing needs, and those needs depend on whether an application is used for training or inference. For instance, AI training systems must store massive volumes of data as they refine their algorithms, but AI inference systems store only input data that might be useful in future training. Overall, demand for storage will be higher for AI training than for inference.

One potential disruption in storage is new forms of non-volatile memory (NVM). New forms of NVM have characteristics that fall between traditional memory, such as DRAM, and traditional storage, such as NAND flash. They can promise higher density than DRAM, better performance than NAND, and lower power consumption than both. These characteristics will enable new applications and allow NVM to substitute for DRAM and NAND in others. The market for these forms of NVM is currently small, representing about $1 billion to $2 billion in revenue over the next two years, but it is projected to exceed $10 billion in revenue by 2025.

The NVM category includes multiple technologies, which differ in memory access time and cost and are at various stages of development. Magnetoresistive random-access memory (MRAM) has the lowest read and write latency, with data retention of more than five years and excellent endurance. However, its capacity scaling is limited, making it a costly alternative that may be used for frequently accessed caches rather than as a long-term data-retention solution. Resistive random-access memory (ReRAM) could potentially scale vertically, giving it an advantage in scaling and cost, but it has higher latency and reduced endurance. Phase-change memory (PCM) fits between the two, with 3D XPoint being the best-known example. Endurance and error rate will be key limitations that must be overcome before more widespread adoption.

Networking

AI applications require many servers during training, and the number increases over time. For instance, developers need only one server to build an initial AI model and fewer than 100 to improve its structure. But training with real data, the logical next step, could require several hundred. Autonomous-driving models require more than 140 servers to reach 97 percent accuracy in detecting obstacles.

If the network connecting servers is slow, as is usually the case, it will cause training bottlenecks. Although most strategies for improving network speed now involve data-center hardware, developers are investigating other options, including programmable switches that can route data in different directions. This capability will accelerate one of the most important training tasks: resynchronizing weights among multiple servers whenever model parameters are updated. With programmable switches, resynchronization can occur almost instantly, which could increase training speed by two to ten times. The greatest performance gains would come with large AI models, which use the most servers.
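The article does not specify how resynchronization is carried out. A common scheme is synchronous data-parallel training: each server computes gradients on its own slice of data, the gradients are averaged across servers (an all-reduce), and every server applies the same update so all copies of the weights stay identical. The sketch below shows only that arithmetic, with hypothetical function names and toy numbers; in a real cluster the averaging step is the network traffic that faster switches and interconnects aim to speed up.

```python
from typing import List

Vector = List[float]


def all_reduce_mean(worker_grads: List[Vector]) -> Vector:
    """Element-wise mean of gradients across workers (what an all-reduce computes)."""
    n_workers = len(worker_grads)
    return [sum(values) / n_workers for values in zip(*worker_grads)]


def sync_step(replicas: List[Vector], worker_grads: List[Vector], lr: float) -> List[Vector]:
    """Apply the same averaged update on every replica so the weights stay in sync."""
    avg = all_reduce_mean(worker_grads)
    return [[w - lr * g for w, g in zip(weights, avg)] for weights in replicas]


# Hypothetical setup: 4 servers, each holding a copy of a 3-parameter model.
initial = [0.5, -0.2, 1.0]
replicas = [list(initial) for _ in range(4)]
grads = [[0.1, 0.0, -0.2], [0.3, 0.1, -0.1], [0.2, -0.1, 0.0], [0.0, 0.2, -0.3]]

replicas = sync_step(replicas, grads, lr=0.1)
print(replicas[0])
```

Because every server must wait for the averaged gradients before its next step, the time spent in this exchange grows with the number of servers, which is why the largest models benefit most from faster synchronization.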

Another option for improving networking involves using high-speed interconnections in servers. This technology can produce a threefold improvement in performance, but it’s also about 35 percent more expensive.
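The trade-off can be expressed as performance per dollar. This minimal sketch assumes the threefold performance gain and the 35 percent cost premium are measured against the same baseline system; the text does not say so explicitly, and the helper name is hypothetical.

```python
def performance_per_cost(perf_multiple: float, cost_multiple: float) -> float:
    """Performance delivered per unit of cost, relative to a baseline of 1.0."""
    return perf_multiple / cost_multiple


baseline = performance_per_cost(1.0, 1.0)
interconnect = performance_per_cost(3.0, 1.35)  # 3x performance, 35% higher cost
print(f"high-speed interconnect: {interconnect / baseline:.2f}x performance per dollar")
```

Under that assumption, the faster interconnect delivers a little more than twice the performance per dollar of the baseline.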

Semiconductor companies need new strategies for the AI market

It’s clear that opportunities abound, but success isn’t guaranteed for semiconductor players. To capture the value they deserve, they’ll need to focus on end-to-end solutions for specific industries (also called microvertical solutions), ecosystem development, and innovation that goes far beyond improving compute, memory, and networking technologies.

Customers will value end-to-end solutions for microverticals that deliver a strong return on investment

AI hardware solutions are useful only if they’re compatible with all other layers of the technology stack, including the solutions and use cases in the services layer. Semiconductor companies can take two paths to achieve this goal, and a few have already begun doing so. First, they could work with partners to develop AI hardware for industry-specific use cases, such as oil and gas exploration, to create an end-to-end solution. For example, Mythic has developed an ASIC to support edge inference for image- and voice-recognition applications within the healthcare and military industries. Alternatively, semiconductor companies could focus on developing AI hardware that enables broad, cross-industry solutions, as Nvidia does with GPUs.

The path taken will vary by segment. For memory and storage players, solutions tend to have the same technology requirements across microverticals. In compute, by contrast, AI algorithm requirements may vary significantly. An edge accelerator in an autonomous car must process very different data from a language-translation application that relies on the cloud. Under these circumstances, companies cannot rely on other players to build the other layers of the stack in a way that will be compatible with their hardware.

Active participation in ecosystems is vital for success

Semiconductor players will need to create an ecosystem of software developers who prefer their hardware by offering products with wide appeal. In return, they’ll have more influence over design choices. For instance, developers who prefer certain hardware will use it as a starting point when building their applications. They’ll then look for other components that are compatible with it.

To help draw software developers into their ecosystem, semiconductor companies should reduce complexity whenever possible. Since there are now more types of AI hardware than ever, including new accelerators, players should offer simple interfaces and software-platform capabilities. For instance, Nvidia provides developers with Compute Unified Device Architecture (CUDA), a parallel-computing platform and application programming interface (API) that works with multiple programming languages. It allows software developers to use CUDA-enabled GPUs for general-purpose processing. Nvidia also provides software developers with access to a collection of primitives for use in DL applications. The platform has now been deployed across thousands of applications.

Within strategically important industry sectors, Nvidia also offers customized software-development kits. To assist with the development of software for self-driving cars, for instance, Nvidia created DriveWorks, a kit with ready-to-use software tools, including object-detection libraries that can help applications interpret data from cameras and sensors in self-driving cars.

As preference for certain hardware architectures builds throughout the developer community, semiconductor companies will see their visibility soar, resulting in better brand recognition. They’ll also see higher adoption rates and greater customer loyalty, resulting in lasting value.

Only platforms that add real value for end users will be able to compete against comprehensive offerings from large high-tech players, such as Google’s TensorFlow, an open-source library of ML and DL models and algorithms.

TensorFlow supports Google’s core products, such as Google Translate, and also helps the company solidify its position within the AI technology stack, since TensorFlow is compatible with multiple compute accelerators.

Innovation is paramount, and players must go up the stack

Many hardware players who want to enable AI innovation focus on improving the computation process. Traditionally, this strategy has involved offering optimized compute accelerators or streamlining paths between compute and data through innovations in memory, storage, and networking. But hardware players should go beyond these steps and seek other forms of innovation by going up the stack. For example, AI-based facial-recognition systems for secure authentication on smartphones were enabled by specialized software and a 3-D sensor that projects thousands of invisible dots to capture a geometric map of a user’s face. Because these dots are much easier to process than several million pixels from cameras, these authentication systems work in a fraction of a second and don’t interfere with the user experience. Hardware companies could also think about how sensors or other innovative technologies can enable emerging AI use cases.

Semiconductor companies must define their AI strategy now

Semiconductor companies that are first movers in the AI space will be more likely to attract and retain customers and ecosystem partners, and that could prevent later entrants from achieving a leading position in the market. With both major technology players and start-ups now launching independent efforts in the AI hardware space, the window of opportunity for staking a claim will shrink rapidly over the next few years. To establish a strong strategy now, semiconductor companies should focus on three questions:

- Where to play? The first step in creating a focused strategy involves identifying the target industry microverticals and AI use cases. At the most basic level, this involves estimating the size of the opportunity within different verticals, as well as the particular pain points that AI solutions could eliminate. On the technical side, companies should decide whether they want to focus on hardware for data centers or the edge.

- How to play? When bringing a new solution to market, semiconductor companies should adopt a partnership mind-set, since they might gain a competitive edge by collaborating with established players within specific industries. They should also determine what organizational structure will work best for their business. In some cases, they might want to create groups that focus on certain functions, such as R&D, for all industries. Alternatively, they could dedicate groups to select microverticals, allowing them to develop specialized expertise.

- When to play? Many companies might be tempted to jump into the AI market, since the cost of being a follower is high, particularly with DL applications. Further, barriers to entry will rise as industries adopt specific AI standards and expect all players to adhere to them. While rapid entry might be the best approach for some companies, others might want to take a more measured approach that involves slowly increasing their investment in select microverticals over time.

The AI and DL revolution gives the semiconductor industry the greatest opportunity to generate value that it has had in decades. Hardware can be the differentiator that determines whether leading-edge applications reach the market and grab attention. As AI advances, hardware requirements will shift for compute, memory, storage, and networking, and that will translate into different demand patterns. The best semiconductor companies will understand these trends and pursue innovations that help take AI hardware to a new level. In addition to benefiting their bottom line, they’ll also be a driving force behind the AI applications transforming our world.